Project Description

To empower the client to automate certain warehouse processing activities, reduce errors, and improve operational efficiency through the use of image recognition technologies and robust application design.

The Problem

Description

In the domain of MRO (Maintenance, Repair, and Operations) items, warehouse staff members often lack the technical knowledge to correctly identify certain stock items. As a result, items are placed in the wrong containers, which causes picking errors and requires further housekeeping. Not only is this time-consuming, but it also leads to millions of rands being lost per annum.

The Solution

Description

The solution comes in the form of a user-friendly, cross-platform application that lets the user capture or select an image of an item and, through the use of Computer Vision and Artificial Intelligence, have the application classify the item in the image. Both a desktop web app and a native mobile app are used to provide the solution, which also includes basic stock counting and weight analysis functionality. The solution is abstracted further through the Dashboard API, which allows developers and interested clients to create their own custom image classification models for use in their products to meet their specific needs. No advanced knowledge is required to produce these custom models.
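As a rough illustration of the classification step described above, the following minimal sketch turns a model's raw per-class scores into a predicted label with a confidence value, and flags low-confidence predictions for manual review. It is not the project's actual implementation: the function names, the class labels, the raw scores, and the `min_confidence` threshold are all assumptions made for this example.

```python
import math
from typing import Dict, List, Tuple


def softmax(scores: List[float]) -> List[float]:
    """Convert raw model scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def classify(
    scores: Dict[str, float], min_confidence: float = 0.6
) -> Tuple[str, float, bool]:
    """Return (label, confidence, needs_review) for one image's scores.

    `scores` maps hypothetical class labels to raw model outputs.
    `min_confidence` is an assumed threshold, not a value from the project.
    """
    labels = list(scores)
    probs = softmax([scores[label] for label in labels])
    best = max(range(len(labels)), key=lambda i: probs[i])
    confidence = probs[best]
    return labels[best], confidence, confidence < min_confidence


# Hypothetical raw scores for one photographed item.
label, confidence, needs_review = classify(
    {"hex bolt": 4.0, "ball bearing": 1.0, "gasket": 0.5}
)
```

Returning a review flag alongside the label is one possible design choice: it would let the app ask the user to retake the photo or check the item manually when the prediction is uncertain, rather than risk a misplaced item.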

User Story

1. Bob is part of the warehouse staff. He cannot identify every stock item he needs to.

2. Bob wants to identify what a certain item is so that he can handle it accordingly.

3. Bob uses the Ninshiki app on his phone to identify the class or type of the item.

4. Ninshiki uses Artificial Intelligence and Computer Vision to predict the class of the item.

5. Bob gets the item's class from the app, and can now correctly handle the item. Problem solved!

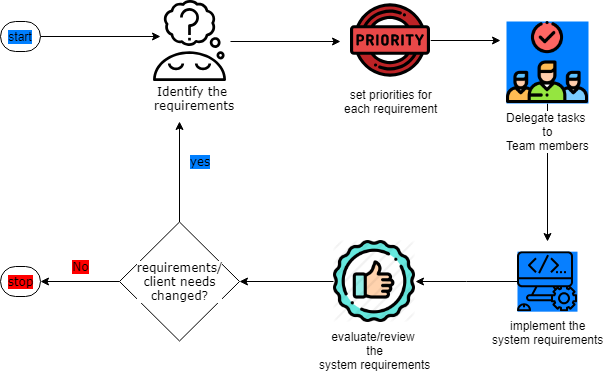

The Process

An Agile development process (Scrum methodology) was followed, in which the implementation of the system was broken into sprints. Each sprint lasted two or three weeks and included a pre-sprint planning meeting and a post-sprint review meeting, as well as weekly meetings. The process is shown in the flowchart below.